Making sense out of $10x=9.999\dots$

For technical reasons, subindices cannot be used. Please be mindful of this when seeing expressions such as $a1$ or $bn$.

The controversial identity

The identity $0.999\dots=1$ seems to be a controversial one, at least in the math-oriented demographics on the internet. Some people argue that $0.999\dots$ cannot be equal to $1$, since it sits ever so close to $1$ without ever reaching it. One can see this by truncating its decimal expansion after more and more digits: $$0.9, 0.99, 0.999, 0.9999, 0.99999, \dots$$ This argument summons, knowingly or otherwise, the existence of surreal numbers, popularised by D. Knuth in his eponymous 1974 book.

While the topic of surreal numbers is undeniably very interesting from the point of view of real analysis (see this article for a general, albeit somewhat technical overview of what “works” and what “does not” when one considers surreal numbers), it fails to address the fact that working in the field of real numbers when speaking about numbers with decimals is an unspoken convention understood from context. Indeed, just as one does not specify degrees Celsius or Fahrenheit when discussing the weather in the school cafeteria, one does not specify that $0.7316\dots$ is a real number during a Calculus I class.

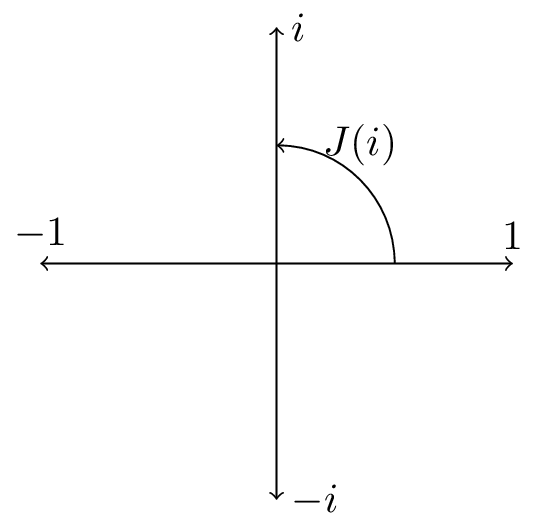

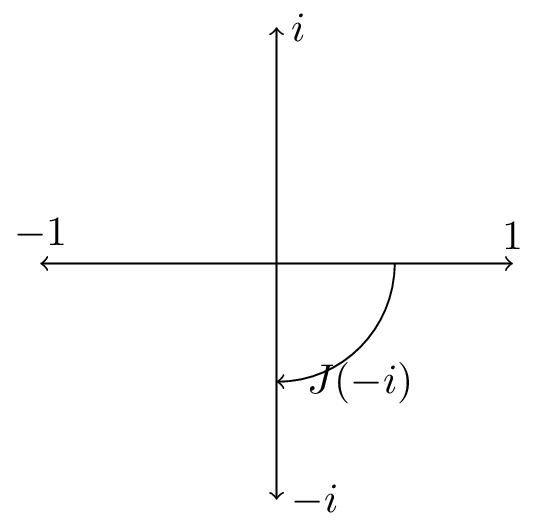

At the other end of the scale, one finds the mathematics popularisers, who most often justify the identity $0.999\dots=1$ by using a geometric argument: if we set $x=0.999\dots$, then $10x=9.999\dots$. The algorithm of subtraction then implies that $9x=10x-x=9$, and thus $x=1$. However, some have pointed out that this argument makes use of unjustified implicit assumptions. Indeed, the indiscriminate use of the geometric argument can lead to identities such as $1+2+3+\dots=-1/12$ ([1,2,3]).

While this identity has a place in physics and can be interpreted as a valid expression under the correct assumptions, it remains a cautionary tale of how computations are only valid so long as they are framed in their appropriate context. A more approachable example is perhaps how Pythagoras' Theorem states that $a^2+b^2=c^2$, but when solving for $c$, we need to rule out the negative solution since $c$ is meant to be a length, hence $c > 0$.

Making sense out of infinite decimals

We begin by addressing the topic of infinite decimals, repeating or otherwise. Consider first a number with a finite amount of decimals: $$0.619=0.6+0.01+0.009=6\cdot\frac{1}{10}+1\cdot\frac{1}{100}+9\cdot \frac{1}{1000}$$ We can extend this logic to define a number with infinite, repeating decimals, $$0.999\dots=0.9+0.09+0.009+\dots=\frac{9}{10}+\frac{9}{100}+\frac{9}{1000}+\dots$$ This defines an infinite sum, which converges to a real number between $0$ and $1$ (inclusive). The same logic can be used with any real number. Moreover, this infinite sum has a special property: it is absolutely convergent.
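The finite sums above can be checked with exact arithmetic. The following is a minimal sketch, not part of the original argument; the helper name `partial_sum` is made up for illustration. It computes the value of the first $n$ digits of a decimal expansion and shows that, for $0.999\dots$, the gap to $1$ after $n$ digits is exactly $1/10^n$.

```python
from fractions import Fraction

def partial_sum(digits, n):
    """Exact value of 0.d1 d2 ... dn, using only the first n digits.

    `digits` is a list of integers between 0 and 9 (hypothetical helper).
    """
    return sum(Fraction(d, 10**k) for k, d in enumerate(digits[:n], start=1))

# 0.619 written as tenths + hundredths + thousandths.
print(partial_sum([6, 1, 9], 3))

# Truncations of 0.999...: print n, the truncated value, and its gap to 1.
nines = [9] * 12
for n in (1, 2, 3, 6, 12):
    s = partial_sum(nines, n)
    print(n, s, 1 - s)
```

The gap shrinks by a factor of ten with each extra digit, which is the precise sense in which the truncations approach $1$.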

To see what this means, we consider the alternating harmonic series, $$1-\frac{1}{2}+\frac{1}{3}-\frac{1}{4}+\dots$$ It is well known that if we swap all the $-$ signs for $+$ signs, this sum diverges; it is the well-known harmonic series. Nonetheless, if we leave it as-is, this sum converges to the natural log of $2$. This is called conditional convergence. On the other hand, the sum $$1-\frac{1}{2^2}+\frac{1}{3^2}-\frac{1}{4^2}+\dots$$ converges whether we keep the signs as they are or swap all the $-$ signs for $+$ signs. This is called absolute convergence.
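A numerical experiment can make the difference tangible. The sketch below is only an illustration (finite partial sums prove nothing about convergence), and the function names are made up for this example. It compares partial sums of the four series discussed above.

```python
import math

def alternating_harmonic(n):
    """Partial sum 1 - 1/2 + 1/3 - ... with n terms."""
    return sum((-1) ** (k + 1) / k for k in range(1, n + 1))

def harmonic(n):
    """Partial sum 1 + 1/2 + 1/3 + ... with n terms."""
    return sum(1 / k for k in range(1, n + 1))

def alternating_squares(n):
    """Partial sum 1 - 1/2^2 + 1/3^2 - ... with n terms."""
    return sum((-1) ** (k + 1) / k**2 for k in range(1, n + 1))

def squares(n):
    """Partial sum 1 + 1/2^2 + 1/3^2 + ... with n terms."""
    return sum(1 / k**2 for k in range(1, n + 1))

print("log(2) =", math.log(2))
for n in (10, 1000, 100000):
    print(n, alternating_harmonic(n), harmonic(n),
          alternating_squares(n), squares(n))
```

The alternating harmonic column settles near $\log 2$ while the harmonic column keeps growing; both columns with squared denominators settle down.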

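In particular, the number $0.a1a2a3\dots$ can be written as the infinite sum $$ \frac{a1}{10} + \frac{a2}{100} + \frac{a3}{1000}+\dots $$ where each of $a1, a2, a3, \dots $ is an integer between $0$ and $9$. Every term is non-negative and no larger than the corresponding term of $0.999\dots$, so the sum converges, and since there are no negative signs to swap, it converges absolutely. Therefore, any such number defines an absolutely convergent infinite sum.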

When it is allowed to use the subtraction algorithm

The algorithm of subtraction with carryover does not behave well for numbers with infinite, non-repeating digits (such as $\pi$), since this algorithm requires us to start at the rightmost digit (which does not exist in a number with infinitely many decimals). The algorithm of subtraction without carryover does work when both numbers consist of a finite block of digits that repeats forever, such as $0.545454\dots$ (which we shall denote $0.[54]$ for simplicity) or $0.888\dots$.

We shall denote the number $0.a1a2\dots ana1a2\dots an\dots$ as $0.[a1\dots an]$.

Theorem. Let $$S1=a1+a2+a3+\dots;\quad S2=b1+b2+b3+\dots$$ be absolutely convergent infinite sums. Then, $$S1-S2=(a1-b1)+(a2-b2)+(a3-b3)+\dots$$ In particular, if $x=0.[a1\dots an]$ and $y=0.[b1\dots bn]$ with $a1 \geq b1$, ..., $an \geq bn$, then $$x-y=0.[(a1-b1)\dots (an-bn)].$$
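The second part of the theorem can be sanity-checked on examples. The sketch below is a check rather than a proof: it assumes the standard closed form $0.[a1\dots an]=\frac{a1\dots an}{10^n-1}$ for the value of a repeating decimal, and the helper names `repeating` and `subtract_blocks` are hypothetical.

```python
from fractions import Fraction

def repeating(block):
    """Exact value of 0.[block]; e.g. repeating("54") stands for 0.545454...

    Assumption: uses the closed form block / (10^n - 1), n = len(block).
    """
    return Fraction(int(block), 10 ** len(block) - 1)

def subtract_blocks(a, b):
    """Digit-by-digit subtraction of two equal-length blocks, without carryover."""
    if len(a) != len(b):
        raise ValueError("blocks must have the same length")
    if any(int(x) < int(y) for x, y in zip(a, b)):
        raise ValueError("every digit of a must be >= the matching digit of b")
    return "".join(str(int(x) - int(y)) for x, y in zip(a, b))

# 0.[54] - 0.[21] should be 0.[33].
diff_block = subtract_blocks("54", "21")
print(diff_block, repeating("54") - repeating("21") == repeating(diff_block))
```

The condition $a1 \geq b1$, ..., $an \geq bn$ is what guarantees that no carryover is ever needed, which is why the function rejects any other input.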

Note that we have assumed for simplicity that all numbers are between $0$ and $1$, but any real number $x > 1$ can be written as $x=m+0.a1a2a3\dots$ for some natural number $m$. Thus the theorem above works for, say, $42.[54]$.

There is another result we still need to finally justify the popular method used to prove that $0.999\dots=1$:

Theorem. Let $$S=a1+a2+a3+\dots$$ be an absolutely convergent infinite sum, and $\alpha$ be a real number. Then, $$\alpha S=(\alpha a1)+(\alpha a2)+(\alpha a3)+\dots$$

Observe that both theorems depend on the sums being convergent; they cannot be applied to a divergent sum such as $1+2+3+\dots$. As an application, if we choose $S=0.[54]$ and $\alpha=100$, then $$\alpha S=100\left(\frac{5}{10}+\frac{4}{100}+\frac{5}{1000}+\frac{4}{10000}+\dots\right)=$$ $$=5\cdot 10+4\cdot 1+\frac{5}{10}+\frac{4}{100}+\frac{5}{1000}+\frac{4}{10000}+\dots=54.[54].$$ More generally, if $S=0.[a1\dots an]$ and $\alpha=10^n$, then $\alpha S=a1\dots an.[a1\dots an]$. This not only justifies the step used in the title (that $10x=9.999\dots$), but it also justifies the algorithm seen in middle school to turn an infinitely repeating decimal number into fraction form!
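The middle school algorithm mentioned above can be sketched in a few lines. This is a minimal illustration under the assumptions of the two theorems, with a made-up function name: if $x=0.[a1\dots an]$, then $10^n x - x$ is the integer $a1\dots an$, so $x$ is that integer divided by $10^n-1$.

```python
from fractions import Fraction

def repeating_to_fraction(block):
    """Turn 0.[block] into a fraction (hypothetical helper).

    Multiplying x = 0.[block] by 10^n moves one copy of the block in front
    of the decimal point: 10^n * x = block.[block]. Subtracting x removes
    the repeating tail, leaving (10^n - 1) * x = block.
    """
    n = len(block)
    return Fraction(int(block), 10**n - 1)

for block in ("9", "54", "8", "142857"):
    print(f"0.[{block}] =", repeating_to_fraction(block))
```

With the block `"9"` the function returns $9/9$, which is the computation $9x=9$ from the popular argument.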

Conclusion

Despite my best intentions, this has turned out to be a rather lengthy and technical explanation. I can only hope that the examples given along the way (and more importantly, the references) are enough for a high school student with an interest in mathematics to grasp the gist of what is going on.

At its core, the problem with $0.999\dots$ is that it is a subtle way to write a limit, an expression that approaches a value indefinitely. Zeno's arrow paradox echoes in the words of those who struggle to grasp the idea that $0.999\dots$ can be equal to $1$: how can a number which stands apart from $1$ at each step somehow reach that value?

There are indeed mathematical models where this can and does happen, but the real numbers have a property known as sequential closure, meaning that the limit of a sequence of numbers which inches ever closer to a fixed value $x$ must be equal to $x$.